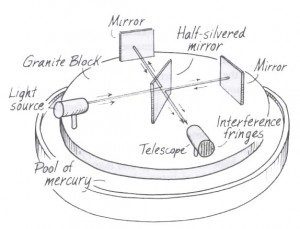

The Michelson interferometer continues to be used in many scientific experiments. It is best known for its role in the Michelson–Morley experiment of 1887, which sought to measure Earth’s motion through the supposed luminiferous aether, the medium in which most physicists of the time believed light waves propagated.

The field of radio astronomy owes a great debt to the famously failed Michelson–Morley experiment, which set out in 1887 to characterize the effects of mysterious luminiferous “aether wind” on the speed of light. One of science’s more fruitful failures, it tilled the ground for Einstein’s special theory of relativity.

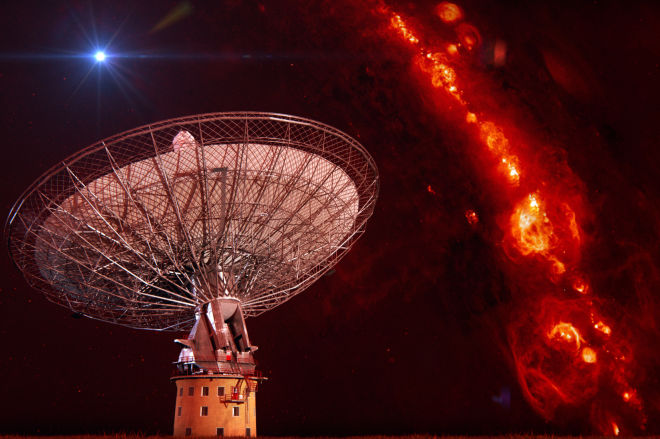

At the center of the experiment was Michelson’s ingenious invention, the interferometer, the basic concept of which is still at work in modern radio telescope arrays—and still revealing, if not always solving, the deep mysteries of the cosmos.

Interferometry lets radio astronomers not only gather more of a distant source’s faint signal but also increase the resolution of their observations, revealing ever greater detail in otherwise murky phenomena. Both capabilities matter, because radio waves emanating from space are exceptionally weak. So weak, in fact, that astronomers at the Parkes Observatory, northwest of Sydney, Australia, put it this way: “The power received from a strong cosmic radio source… is about a hundredth of a millionth of a millionth of a Watt (10⁻¹⁴ W). If you wanted to heat water with this power it would take about 70,000 years to heat one drop by one degree Celsius.”

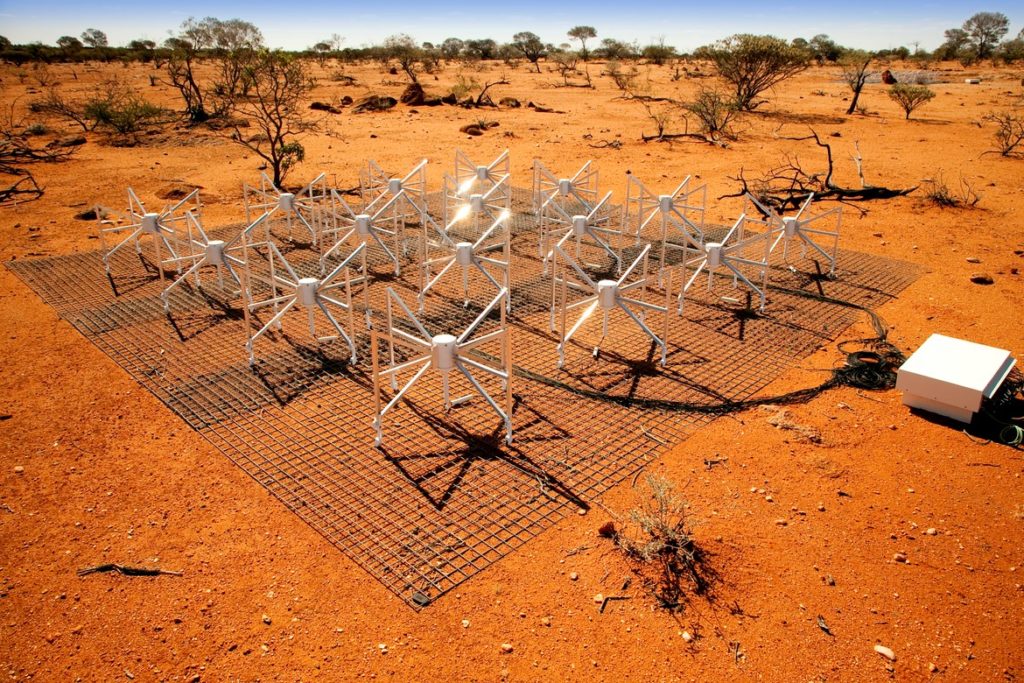

This need for high sensitivity (plus the additional challenge presented by the long wavelengths of radio emission) is why radio telescope antennas, whether used singly or in an array, require such large collecting areas. The Arecibo radio telescope, for example, measures 305 meters across, whereas the ASKAP array, presently under construction in Western Australia, will ultimately be made up of 36 identical antennas, each 12 meters in diameter, that together form a single “distributed” instrument with a large effective collecting area. (To achieve the same sensitivity with a single dish, its area would have to be approximately one square kilometer, a diameter of about 1.1 km!) These arrays rely upon “aperture synthesis,” a type of interferometry, to combine the signals from the individual antennas, yielding resolution equivalent to that of a telescope whose diameter equals the greatest distance between the array’s elements.
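The two size figures in this paragraph can be checked with a little arithmetic. The sketch below is a minimal Python illustration, not anything from the ASKAP project: it converts a collecting area into an equivalent dish diameter and applies the standard diffraction estimate θ ≈ λ/D, where D is a dish diameter or, for an aperture-synthesis array, the longest baseline. The 21 cm wavelength and the 6 km baseline are assumed values chosen only for illustration.

```python
import math


def equivalent_diameter(area_m2: float) -> float:
    """Diameter (m) of a single circular dish with the given collecting area (m^2)."""
    return 2.0 * math.sqrt(area_m2 / math.pi)


def resolution_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Diffraction-limited resolution, theta ~ lambda / D, in arcseconds.

    For a single dish, D is its diameter; for an aperture-synthesis array,
    D is the longest baseline (greatest separation between elements).
    The order-unity prefactor (e.g. 1.22 for a uniformly lit dish) is ignored.
    """
    return math.degrees(wavelength_m / aperture_m) * 3600.0


if __name__ == "__main__":
    # A 1 km^2 aperture corresponds to a dish roughly 1.13 km across.
    print(f"1 km^2 dish: {equivalent_diameter(1.0e6):.0f} m in diameter")

    wavelength = 0.21  # assumed observing wavelength (21 cm), for illustration only
    for label, d in [("12 m dish", 12.0),
                     ("305 m dish", 305.0),
                     ("6 km baseline (hypothetical)", 6000.0)]:
        print(f"{label}: ~{resolution_arcsec(wavelength, d):.1f} arcsec")
```

Because resolution scales as 1/D, spreading elements over kilometers buys far finer detail than any single dish could offer.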

Radio astronomy had its formal genesis in 1931, when Karl Jansky of Bell Telephone Laboratories began searching for the source of radio noise that Bell feared might interfere with its transatlantic communications system. Using a large antenna he had built, he found it. The noise turned out to come from near the center of the Milky Way (in the constellation Sagittarius), and its extraterrestrial origin caused a bit of a stir. Because nothing could be done about such “star noise,” Jansky’s bid to investigate the phenomenon further was rejected, and he moved on to other projects at Bell. But Grote Reber, an amateur radio operator who learned of Jansky’s discovery, picked up where Jansky left off, building a 9.5-meter parabolic dish antenna in his backyard. With it, he compiled the first survey of the radio sky, which he completed in 1941. When astronomers realized that radio telescopes could detect radio waves from the center of the galaxy, well beyond the reach of optical instruments, the era of the great radio telescopes began. With it came the discovery of a great many mysterious phenomena, not the least of which is the black hole at the Milky Way’s center, in the very region Jansky unwittingly exposed in his 1931 radio-frequency interference (RFI) investigation!

Jansky, pictured with his seminal radio antenna. The antenna, tuned to receive radio waves at a frequency of 20.5 MHz, had a diameter of approximately 100 ft. and stood 20 ft. tall. Rotating it on a set of four Ford Model T tires allowed the direction of a received signal to be pinpointed.

Jesse Greenstein, another astronomer who recognized the importance of Jansky’s findings at the time, noted that analysis of radio waves emanating from space provided researchers with “10,000 times the information” they could get from optical astronomy. This would, indeed, revolutionize our understanding of the cosmos.

The next big discovery in the field came on November 28, 1967. Jocelyn Bell Burnell and Antony Hewish observed pulses separated by 1.33 seconds that originated from a fixed position on the sky. The pulses followed sidereal time, ruling out man-made radio frequency interference, and confirmation with another telescope eliminated anything intrinsic to the telescope itself. It was, indeed, a great mystery. Burnell recalled, “We did not really believe that we had picked up signals from another civilization, but obviously the idea had crossed our minds, and we had no proof that it was an entirely natural radio emission. It is an interesting problem—if one thinks one may have detected life elsewhere in the universe, how does one announce the results responsibly?” With tongue in cheek, they dubbed the signal LGM-1, for “little green men.” The source proved to be the first known pulsar, a rapidly rotating neutron star.

By then, radio astronomers had already revealed the cosmic microwave background radiation, and they would go on to discover myriad new sources of radio emission. And now, through the work of researchers at Australia’s Curtin University, an entirely new class of radio phenomena is being explored: one-off transient radio bursts that last only milliseconds and whose origin is presently unknown. These events are far more mysterious than Michelson’s luminiferous aether, and far rarer. In fact, only a handful of such transients have been recorded to date. But that’s about to change.

Radio Astronomy Enters New Computational Era

Dr. Nathan Clarke, a former research engineer with the International Centre for Radio Astronomy Research at Curtin University in Perth, led the development of a novel transient detection engine (dubbed Tardis) that captures and processes these fleeting signals in real time from the multiple beams of a telescope array. It’s no small challenge: doing so involves a series of computationally intensive operations that begins with “cleaning up” the faint, noise-shrouded signals so that the evanescent events can be detected at all.

Unlike Michelson’s spurious “aether wind,” the very real “interstellar fog” has a decided effect on the propagation of electromagnetic waves. Narrow pulses, whether emanating from a known pulsar or a mysterious fast transient, are delayed, dispersed, and scattered by free electrons in the ionized interstellar and intergalactic soup, reducing their detectability. The silver lining in this cosmic cloud is that these effects carry information: the lower-frequency components of a pulse travel through the medium more slowly than the higher-frequency components, so they arrive later and the pulse is smeared out in time. The size of this frequency-dependent delay gives the pulse’s “dispersion measure” (DM), the total column density of free electrons along the line of sight, which yields important data about the source and its distance, as well as new insights into the interstellar medium itself.
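The frequency-dependent delay described above follows the standard cold-plasma dispersion relation, Δt ≈ 4.148808 ms × DM × (ν_lo⁻² − ν_hi⁻²), with ν in GHz and DM in pc cm⁻³. A minimal Python sketch of that relation and of its inverse (which is how a measured delay becomes a DM) is shown below; the 1.2 to 1.5 GHz band and the DM of 1000 pc cm⁻³ are hypothetical example values.

```python
# Cold-plasma dispersion constant, in ms * GHz^2 / (pc cm^-3).
K_DM_MS = 4.148808


def dispersion_delay_ms(dm: float, f_lo_ghz: float, f_hi_ghz: float) -> float:
    """Arrival delay (ms) at f_lo relative to f_hi for a pulse with the given DM (pc cm^-3)."""
    if not 0.0 < f_lo_ghz <= f_hi_ghz:
        raise ValueError("require 0 < f_lo_ghz <= f_hi_ghz")
    return K_DM_MS * dm * (f_lo_ghz ** -2 - f_hi_ghz ** -2)


def dm_from_delay(delay_ms: float, f_lo_ghz: float, f_hi_ghz: float) -> float:
    """Invert the relation: recover the DM from a delay measured between two frequencies."""
    if not 0.0 < f_lo_ghz < f_hi_ghz:
        raise ValueError("require 0 < f_lo_ghz < f_hi_ghz")
    return delay_ms / (K_DM_MS * (f_lo_ghz ** -2 - f_hi_ghz ** -2))


if __name__ == "__main__":
    # Hypothetical example: DM = 1000 pc cm^-3 observed across 1.2-1.5 GHz.
    delay = dispersion_delay_ms(1000.0, 1.2, 1.5)
    print(f"delay across band: {delay:.1f} ms")  # 1037.2 ms
    print(f"recovered DM: {dm_from_delay(delay, 1.2, 1.5):.1f}")
```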

“Fortunately,” Clarke explains, “there are several methods to partially or completely remove dispersion, a process referred to as de-dispersion.” Clarke’s team ultimately settled on the incoherent de-dispersion variant, which operates on the dynamic spectrum of each signal, measured over successive short intervals of time. “The dynamic spectrum is obtained by using a filter to separate the signal into many frequency channels and then squaring each channel’s signal and integrating it over the desired measuring interval. De-dispersion is then accomplished by applying delays to each channel to compensate for dispersion and summing the time-realigned channels.” The result? A nice, clean pulse.
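The incoherent de-dispersion steps Clarke describes (square and integrate each channel, delay each channel, then sum) can be sketched in a few lines of plain Python. This is an illustrative sketch under simplifying assumptions, not the Tardis implementation: the filterbank is assumed to have run upstream, and the per-channel delays are given as whole samples for a single trial DM.

```python
from typing import List, Sequence


def dynamic_spectrum(channels: Sequence[Sequence[complex]], n_int: int) -> List[List[float]]:
    """Square each channel's voltage samples and integrate over n_int samples.

    `channels` holds one list of complex voltages per frequency channel,
    i.e. the output of a filterbank (assumed to be done upstream).
    """
    spectrum = []
    for volts in channels:
        power = [v.real ** 2 + v.imag ** 2 for v in volts]  # |v|^2
        n_out = len(power) // n_int
        spectrum.append([sum(power[i * n_int:(i + 1) * n_int]) for i in range(n_out)])
    return spectrum


def dedisperse(spectrum: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Incoherent de-dispersion for one trial DM.

    delays[c] is the dispersion delay of channel c in whole samples, relative
    to the reference (highest-frequency) channel. Each channel is advanced by
    its delay so the pulse lines up, then the channels are summed.
    """
    if len(spectrum) != len(delays):
        raise ValueError("need one delay per channel")
    n_out = min(len(row) - d for row, d in zip(spectrum, delays))
    return [sum(row[t + d] for row, d in zip(spectrum, delays)) for t in range(n_out)]


if __name__ == "__main__":
    delays = [0, 1, 3, 5]  # hypothetical per-channel delays, high to low frequency
    n_samp = 12
    # Synthetic dispersed pulse: emitted at sample 2, arriving later in lower channels.
    spec = [[0.1] * n_samp for _ in delays]
    for c, d in enumerate(delays):
        spec[c][2 + d] = 1.0
    series = dedisperse(spec, delays)
    print(series.index(max(series)), max(series))  # 2 4.0
```

In a real pipeline the per-channel delays would be computed from the dispersion relation for each trial DM, and the same summation would run for every trial in parallel.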

But Clarke went further with the algorithm, summing individual spectral samples across both frequency and time, providing a signal-to-noise performance advantage over other de-dispersers that sum only across frequency. He also structured the algorithm for greater scalability so that a larger number of beams can be de-dispersed simultaneously, making for more efficient surveys of the skies.
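The article does not spell out the Tardis summation in detail, so the following is only a hedged sketch of the general idea of summing across both frequency and time. At high DM or low frequency, the dispersion sweep can take several samples to cross a single channel; a de-disperser that takes one sample per channel then misses part of the pulse energy, whereas summing every sample the sweep passes through recovers it. The function names and the floor-based mapping from delay to sample are assumptions.

```python
import math
from typing import List, Sequence, Tuple

K_DM_MS = 4.148808  # dispersion constant, ms GHz^2 / (pc cm^-3)


def sweep_spans(dm: float, f_top_ghz: float, chan_bw_ghz: float,
                n_chan: int, t_samp_ms: float) -> List[Tuple[int, int]]:
    """(first, last) sample offsets the dispersion sweep crosses in each channel.

    Offsets are relative to the pulse's arrival at the top of the band. At high
    DM or low frequency the sweep through a single channel can span many samples.
    """
    def offset(f_ghz: float) -> int:
        return math.floor(K_DM_MS * dm * (f_ghz ** -2 - f_top_ghz ** -2) / t_samp_ms)

    spans = []
    for c in range(n_chan):
        f_hi = f_top_ghz - c * chan_bw_ghz
        f_lo = f_hi - chan_bw_ghz
        spans.append((offset(f_hi), offset(f_lo)))
    return spans


def dedisperse_time_freq(spectrum: Sequence[Sequence[float]],
                         spans: Sequence[Tuple[int, int]]) -> List[float]:
    """Sum every sample on the sweep: across channels (frequency) and within
    each channel's span (time), rather than one sample per channel."""
    max_last = max(last for _, last in spans)
    n_out = min(len(row) for row in spectrum) - max_last
    return [sum(sum(row[t + first:t + last + 1])
                for row, (first, last) in zip(spectrum, spans))
            for t in range(n_out)]


if __name__ == "__main__":
    # Toy example: channel 0's pulse sits in one sample, channel 1's is smeared over three.
    spectrum = [[0, 5, 0, 0, 0],
                [0, 1, 1, 1, 0]]
    spans = [(0, 0), (0, 2)]  # hypothetical spans, as sweep_spans might return
    print(dedisperse_time_freq(spectrum, spans))  # [2, 8, 2]
```

Taking only one sample per channel would give 6 at the peak instead of 8. Summing more samples also adds more noise, so the S/N gain appears only when the pulse energy really does occupy those extra samples, which is the situation the sweep calculation identifies.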

Tackling a Tough Compute-intensive Challenge

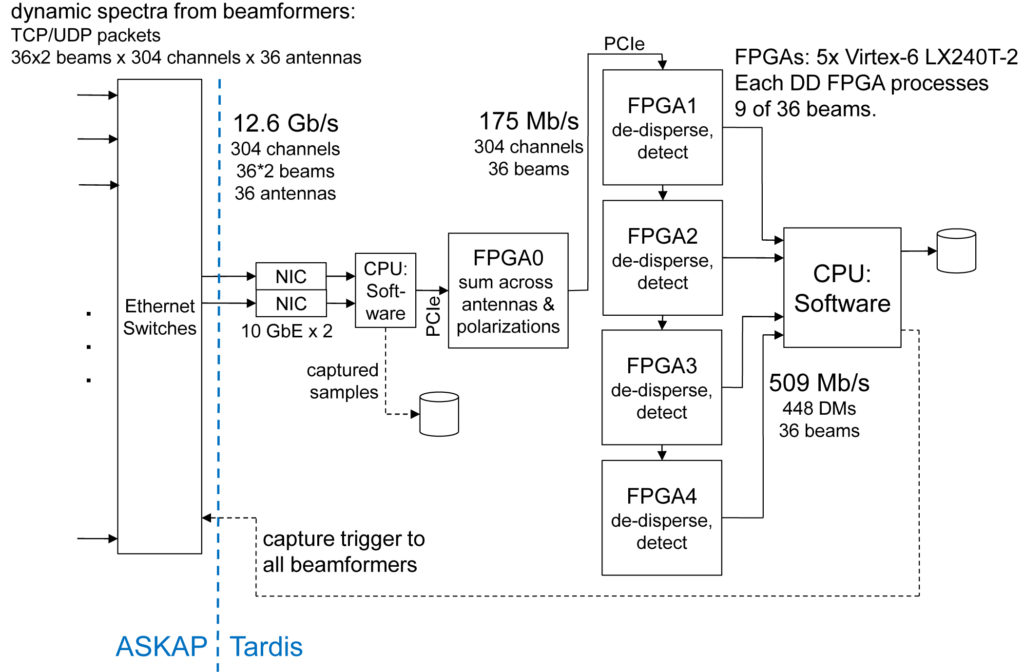

Because the DM is unknown, and can fall anywhere within a wide range, determining the actual DM of a potentially detected pulse (with reasonable accuracy, and hence good signal-to-noise ratio) requires a large number of parallel processes, each de-dispersing the signal for a particular “trial” DM. The ASKAP system, for example, will perform 448 such trials simultaneously on each of its 36 beams, producing a total of 16,128 de-dispersed data streams, with the trial DMs spaced finely enough that a pulse whose true DM falls between two trials loses little signal-to-noise ratio (S/N). Each de-dispersed stream is then searched for events matching the fast transient profile.
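One common way to choose trial DMs, assumed here purely for illustration, is to space them so that adjacent trials shift the delay across the band by about one sample; a pulse whose true DM falls between two trials is then misaligned by at most a sample. The Python sketch below builds such a uniform grid and reproduces the 36 × 448 stream count from the text. The band edges and sample time are hypothetical, and production surveys often widen the step at high DM, which this sketch does not attempt.

```python
from typing import List

K_DM_MS = 4.148808  # dispersion constant, ms GHz^2 / (pc cm^-3)


def dm_step(f_lo_ghz: float, f_hi_ghz: float, t_samp_ms: float) -> float:
    """DM spacing that changes the band-edge delay by exactly one sample."""
    return t_samp_ms / (K_DM_MS * (f_lo_ghz ** -2 - f_hi_ghz ** -2))


def dm_trials(n_trials: int, f_lo_ghz: float, f_hi_ghz: float, t_samp_ms: float) -> List[float]:
    """A uniform grid of n_trials trial DMs starting at zero."""
    step = dm_step(f_lo_ghz, f_hi_ghz, t_samp_ms)
    return [i * step for i in range(n_trials)]


if __name__ == "__main__":
    n_beams, n_trials = 36, 448  # ASKAP figures quoted in the text
    print(n_beams * n_trials)    # 16128 de-dispersed streams
    grid = dm_trials(n_trials, 1.2, 1.5, 1.0)  # band and sample time are hypothetical
    print(f"step {grid[1]:.3f}, max trial DM {grid[-1]:.1f} pc cm^-3")
```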

ASKAP is developing and proving technologies for the international Square Kilometre Array (SKA) telescope, which will start construction in Australia and South Africa in 2018.

Such massively parallel, computationally intensive operations are well beyond the processing capabilities of many-core CPU-based computing systems, which simply cannot keep up with the enormous volume of data streaming in continuously in real time. Yet this is exactly the demand that fast transient detection places on a computational system. The routine de-dispersion of pulsar signals, by contrast, never presented such a challenge.

“In fact,” Clarke explains, “the de-dispersion of pulsars has, up till recently, been done in software. That’s because the pulsar is always on; it’s producing regular radio impulses. You can record your data, take it away, and search for the pulses on your own time; you can fold the signal over at the pulse period to get better sensitivity detection. But with fast transients, you can’t do any of that; these are one-off events with no periodicity or predictability at all. And that is what has driven the need for radically accelerating the de-dispersion algorithm. Fast transients, because they are so irregular, fleeting, and weak, must be detected and de-dispersed in real time.”
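Folding, the technique Clarke contrasts with single-pulse detection, stacks successive cycles of a periodic signal so that the pulse adds up while the noise averages down roughly as 1/√N. A minimal Python sketch follows, using a synthetic, hypothetical pulsar; note that it works only because the period is known, which is precisely what a one-off transient lacks.

```python
import random
from typing import List, Sequence


def fold(series: Sequence[float], period_samples: int) -> List[float]:
    """Average a time series over whole cycles of a known period (epoch folding)."""
    n_cycles = len(series) // period_samples
    if n_cycles == 0:
        raise ValueError("series shorter than one period")
    profile = [0.0] * period_samples
    for k in range(n_cycles):
        for j in range(period_samples):
            profile[j] += series[k * period_samples + j]
    return [p / n_cycles for p in profile]


if __name__ == "__main__":
    rng = random.Random(1)
    period, phase, n_cycles = 50, 10, 400
    # Hypothetical weak pulsar: a 0.5-sigma pulse buried in unit Gaussian noise.
    data = [rng.gauss(0.0, 1.0) + (0.5 if t % period == phase else 0.0)
            for t in range(period * n_cycles)]
    profile = fold(data, period)
    print("peak phase bin:", profile.index(max(profile)))
```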

Power Considerations

To this end, some fast transient detection pipelines have used Graphics Processing Units (GPUs), which are powerful enough to allow real-time searching over a large parameter space. However, GPUs have also proven impractical because of their exceptionally high power consumption. Field Programmable Gate Array (FPGA) technology, on the other hand, delivers better performance than GPUs without the power penalty. “One of our motivations for using FPGAs,” Clarke says, “is the need for systems that are very power-efficient. FPGAs have a factor of ten improvement in power over GPUs. That’s important, because these large radio telescopes are going to be with us for many decades, and there will be a great many FPGAs operating in the background, processing massive amounts of data. So the cost of development will fade into insignificance when you consider a system that will be running continuously for a very long period of time. The extra bit of effort up front pays off in huge power savings.”

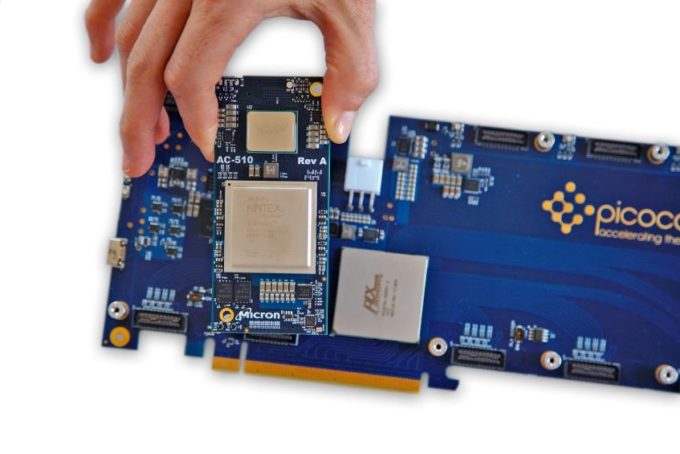

Pico Computing’s high-performance computing modules integrate a Xilinx UltraScale FPGA and Micron’s high-bandwidth Hybrid Memory Cube. Up to six of the business-card-sized modules snap onto any of Pico Computing’s PCIe backplanes (with up to eight backplanes in a 4U rack), packing considerable parallel processing density for compute-intensive applications into a single PCIe slot.

The development team, working cooperatively with NASA’s Jet Propulsion Laboratory (JPL), selected Pico Computing to provide the FPGA-based high-performance computing platform. Before looking at the hardware accelerator in detail, though, it is worth noting the other factors that drove the choice of an FPGA-based system, most notably its simple scalability and ease of reconfiguration.

Meeting the Twin Challenges of Scalability and Flexibility

Because of its parallel architecture, the performance of the fast transient detector scales linearly with the number of FPGAs in the system. In the case of the ASKAP array, with up to 36 beams in its beamformer, Clarke was able to accommodate nine beams in each of the four de-dispersion FPGAs on the Tardis board, which holds up to six FPGAs, yielding a compact and economical solution comprising a single PCIe board. Equally important was the ease of reconfiguring the Tardis board for operation with different radio telescopes. This need became apparent when the scope of the ASKAP development effort was scaled back, delaying the interface that had been planned for the transient detection system.
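The linear scaling and the FPGA budget described here come down to simple arithmetic. The sketch below is a hypothetical helper, not part of the Tardis tooling: it counts the FPGAs a configuration needs and checks the count against the six-module board. The ASKAP case uses the figures in the text (36 beams, nine per FPGA, one summing FPGA); treating the MWA variant as needing no summing FPGA is an assumption.

```python
import math


def fpgas_required(n_beams: int, beams_per_fpga: int, summing_fpgas: int = 0) -> int:
    """De-dispersion FPGAs needed for n_beams, plus any beam-combining FPGAs."""
    return math.ceil(n_beams / beams_per_fpga) + summing_fpgas


BOARD_CAPACITY = 6  # FPGA modules per board, per the text

if __name__ == "__main__":
    askap = fpgas_required(36, 9, summing_fpgas=1)  # 4 de-dispersion + 1 summing
    mwa = fpgas_required(6, 1)  # one beam per FPGA; assumes no summing FPGA
    for name, n in [("ASKAP", askap), ("MWA", mwa)]:
        print(f"{name}: {n} FPGAs, fits on one board: {n <= BOARD_CAPACITY}")
```

Doubling the beam count doubles the de-dispersion FPGAs while the per-FPGA design stays the same, which is what "scales linearly" means in practice.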

The Goldstone Deep Space Communications Complex.

“Unfortunately,” Clarke added, “that meant we’d have to try out our system on other telescopes.” The good news was that reconfiguring the Pico Computing-based Tardis system for different antennas was straightforward, requiring only changes to the parameters that describe the new telescope. “Most of the design was captured in Verilog,” Clarke says, “and we parameterized the key variables so that we could switch to a new variant very quickly. We pretty much just change parameters to suit the telescope, recompile, and load the new bitfile into the FPGAs.”

The most important of these parameters is the SST (sample selection table), into which the user loads a set of pre-calculated dispersion profiles tailored to the observing frequency of the telescope and the range of DMs to be searched. Other parameters include the antenna’s field of view, minimum sensitivity, angular sensitivity, integration time, number of frequency channels, beams per FPGA, and other configuration details.
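To make the idea of a sample selection table concrete, here is a hedged Python sketch of what pre-calculating dispersion profiles might look like: one row per trial DM, listing each channel's sample offset. The `TelescopeParams` fields and the `build_sst` function are hypothetical, and the real SST format is not described in the text; the point is only that retargeting a telescope changes the parameters and the table, not the code.

```python
from dataclasses import dataclass
from typing import List, Sequence

K_DM_MS = 4.148808  # dispersion constant, ms GHz^2 / (pc cm^-3)


@dataclass(frozen=True)
class TelescopeParams:
    """Hypothetical subset of the per-telescope parameters described in the text."""
    f_top_ghz: float    # top of the observing band
    chan_bw_ghz: float  # width of each frequency channel
    n_chan: int         # number of frequency channels
    t_samp_ms: float    # integration time per spectrum
    beams_per_fpga: int


def build_sst(p: TelescopeParams, dms: Sequence[float]) -> List[List[int]]:
    """One row per trial DM: the sample offset of each channel's centre frequency
    relative to the top of the band, i.e. a pre-calculated dispersion profile."""
    table = []
    for dm in dms:
        row = []
        for c in range(p.n_chan):
            f_centre = p.f_top_ghz - (c + 0.5) * p.chan_bw_ghz
            delay_ms = K_DM_MS * dm * (f_centre ** -2 - p.f_top_ghz ** -2)
            row.append(round(delay_ms / p.t_samp_ms))
        table.append(row)
    return table


if __name__ == "__main__":
    # Two hypothetical configurations; retargeting changes only the parameters.
    configs = {
        "high-band": TelescopeParams(1.5, 0.001, 300, 1.0, 9),
        "low-band": TelescopeParams(0.2, 0.00004, 768, 1.0, 1),
    }
    for name, params in configs.items():
        sst = build_sst(params, [0.0, 50.0, 100.0])
        print(f"{name}: {len(sst)} DM rows x {params.n_chan} channels, "
              f"largest offset {sst[-1][-1]} samples")
```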

The first opportunity for reconfiguration came with the deployment of the system at the Goldstone Observatory, part of the Goldstone Deep Space Communications Complex in the Mojave Desert, which is managed by JPL. The 34-meter single-dish antenna (dubbed the DSS-13 “Venus” experimental station), unlike the 36-beam ASKAP, has no need for summing signals across multiple antennas, which frees all six of the system’s FPGAs for de-dispersion.

A third variant processes up to six beams and 1,024 DMs, and is deployed at the Murchison Widefield Array (MWA) in Western Australia, a low-frequency radio array operating in the 80–300 MHz range. Dispersion is much greater at those lower frequencies, which necessitated the increase in de-dispersion trials. “In the original ASKAP version,” Clarke explains, “we could support many separate beams simultaneously in one FPGA, de-disperse them, and search for these fast transients. But the MWA required many more de-dispersion trials and frequency channels to detect the pulses. Consequently we could only fit a single beam into an FPGA.” The advantage, though, is the MWA’s exceptionally large field of view, which lends itself well to intercepting isolated, short-duration pulses.
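The reason low frequencies demand more trials and more channels follows from the dispersion relation: the delay scales as ν⁻², and the smearing within a channel of fixed width scales roughly as ν⁻³. The Python sketch below compares the two ends of the MWA's 80 to 300 MHz range; the DM of 100 pc cm⁻³ and the 10 kHz channel width are hypothetical values.

```python
K_DM_MS = 4.148808  # dispersion constant, ms GHz^2 / (pc cm^-3)


def delay_ms(dm: float, f_ghz: float) -> float:
    """Dispersion delay at f_ghz relative to infinite frequency."""
    return K_DM_MS * dm / f_ghz ** 2


def channel_smear_ms(dm: float, chan_bw_mhz: float, f_ghz: float) -> float:
    """Approximate smearing within one channel of width chan_bw_mhz centred on f_ghz,
    from the derivative of the delay: |dt/df| * df = 2 * K * DM * df / f^3."""
    return 2.0 * K_DM_MS * dm * (chan_bw_mhz / 1000.0) / f_ghz ** 3


if __name__ == "__main__":
    dm = 100.0  # hypothetical DM
    for f in (0.08, 0.30):  # the ends of the MWA's 80-300 MHz range
        print(f"{f * 1000:.0f} MHz: delay {delay_ms(dm, f) / 1000:.1f} s, "
              f"smear per 10 kHz channel {channel_smear_ms(dm, 0.01, f):.2f} ms")
```

Going from 300 MHz down to 80 MHz multiplies the delay by about 14 and the per-channel smearing by about 53, which is why a low-frequency search needs both more trial DMs and narrower channels.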

The Murchison Widefield Array (MWA) is a low-frequency radio telescope operating between 80 and 300 MHz. It is located at the Murchison Radio-astronomy Observatory (MRO) in Western Australia, the planned site of the future Square Kilometre Array (SKA) lowband telescope, and is one of three telescopes designated as a Precursor for the SKA.

If the dispersion measures associated with the fast transients are to be believed, then these exotic events originate from sources billions of light-years away, well beyond our galaxy. Since the first such transient was reported in 2007, found in data from the Parkes Observatory’s 64-meter telescope in Australia, these mysterious events have captured astronomers’ imaginations. In fact, they are so strange that many initially doubted their extraterrestrial origin, chalking the phenomena up to artifacts of the telescope itself. But recent detections of similar signals with other telescopes, most notably at Arecibo, have added weight to their existence and have ignited efforts to determine what is causing them. And with new, real-time instruments emerging to capture the events in large numbers, astronomers can begin to study and characterize them more rigorously, and perhaps solve the next great cosmic mystery. Clarke, however, still looks forward to the full realization of the ASKAP array, as it will capture radio signals with unprecedented sensitivity over large areas of sky. With a wide instantaneous field of view, the ASKAP/Tardis combination will be able to survey the whole sky vastly faster than is possible with existing radio telescopes.

A Closer Look: Inside the Fast Transient Detection System

Originally designed for the ASKAP array, the Tardis fast transient detection system consists of a host computer equipped with a commercial FPGA platform provided by Pico Computing. The computer receives dynamic power spectra from all antennas via a dual 10-GbE network interface card (NIC). The FPGA platform consists of a Pico Computing PCI Express board supporting an array of up to six Pico Computing FPGA plug-in modules. The board connects to the host through a PCIe expansion slot and provides an 8-lane PCIe fabric interconnecting the FPGA modules.

One FPGA is used to combine beams across antennas and polarizations, and four FPGAs implement the de-dispersion and transient detection functions, with each processing nine of the 36 combined beams. The summing FPGA delivers combined beam data to each of the other FPGAs using a daisy-chain interconnection.
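The beam combination and distribution described above can be sketched as follows. For illustration, this assumes that combining across antennas and polarizations means an incoherent sum of detected power, and that beams are split into contiguous blocks, one block per de-dispersion FPGA; the daisy-chain transport between FPGAs is not modelled, and all names are hypothetical.

```python
from typing import Dict, List, Sequence


def combine_beams(power: Sequence[Sequence[Sequence[Sequence[float]]]]) -> List[List[float]]:
    """Incoherently sum power over antennas and polarizations.

    power[antenna][pol][beam][sample]; a single frequency channel is used for
    brevity, whereas real data would carry a spectrum per sample.
    """
    n_beam = len(power[0][0])
    n_samp = len(power[0][0][0])
    combined = [[0.0] * n_samp for _ in range(n_beam)]
    for antenna in power:
        for pol in antenna:
            for b, series in enumerate(pol):
                for t, p in enumerate(series):
                    combined[b][t] += p
    return combined


def assign_beams(n_beams: int, n_fpgas: int) -> Dict[int, List[int]]:
    """Split beams into contiguous blocks, one block per de-dispersion FPGA."""
    per_fpga = -(-n_beams // n_fpgas)  # ceiling division
    return {f: list(range(f * per_fpga, min((f + 1) * per_fpga, n_beams)))
            for f in range(n_fpgas)}


if __name__ == "__main__":
    plan = assign_beams(36, 4)
    # {0: (0, 8), 1: (9, 17), 2: (18, 26), 3: (27, 35)}
    print({f: (beams[0], beams[-1]) for f, beams in plan.items()})
```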

Software running on the host CPU is mainly responsible for managerial tasks, such as communicating with the telescope control system to obtain relevant operational parameters, and configuring and initializing the FPGAs. The host software can also record the de-dispersed time series to disk. For each tentative pulse detection, the software generates a capture trigger signal and sends it to all beamformer assemblies. The saved voltage samples can then be downloaded from the beamformer assemblies via the same Ethernet switches that deliver the spectral data to the host, and recorded to disk.
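A tentative detection is, at its simplest, a de-dispersed sample that rises far above the noise. The hedged Python sketch below flags such samples and packages them as trigger records. The `CaptureTrigger` fields, the 6σ threshold, and the `send` callback are hypothetical stand-ins, since the text does not describe the trigger message or the detection criterion.

```python
import statistics
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass(frozen=True)
class CaptureTrigger:
    """Hypothetical trigger record; the real message format is not described."""
    beam: int
    dm_index: int
    sample: int
    snr: float


def find_triggers(series: Sequence[float], beam: int, dm_index: int,
                  threshold: float = 6.0) -> List[CaptureTrigger]:
    """Flag samples whose S/N (in units of the series' standard deviation) meets the threshold."""
    mean = statistics.fmean(series)
    std = statistics.pstdev(series)
    if std == 0.0:
        return []
    return [CaptureTrigger(beam, dm_index, t, (x - mean) / std)
            for t, x in enumerate(series) if (x - mean) / std >= threshold]


def dispatch(triggers: Sequence[CaptureTrigger],
             send: Callable[[CaptureTrigger], None]) -> int:
    """Hand each trigger to a caller-supplied sender; returns how many were sent."""
    for trig in triggers:
        send(trig)
    return len(triggers)


if __name__ == "__main__":
    series = [0.0] * 99 + [10.0]  # toy de-dispersed time series with one spike
    triggers = find_triggers(series, beam=3, dm_index=42)
    dispatch(triggers, print)  # a real system would notify the beamformers instead
```

The mean and standard deviation here include the pulse itself; a robust estimator such as the median absolute deviation would be less biased by bright events.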

“The Pico Computing hardware accelerator is a very good platform,” Clarke adds. “It was smooth going getting it up and running.”

For more information on the Tardis system, see “A Multi-beam radio Transient Detector with real-time De-dispersion over a Wide DM range”, Journal of Astronomical Instrumentation, Vol. 3, no. 1, 2014.
